The Use of Captions in Post-Secondary Institutions

Issues in Accessibility

Janine Verge, AuD, Aud (C), is coordinating the “Issues in Accessibility” column, which will cover topics addressing accessibility for people who are Deaf/deaf and hard of hearing.

In this edition of “Issues in Accessibility,” audiologist Janine Verge and Dalhousie University audiology students Vincent Chow, Saidah Adisa, and Sylvia Ciechanowski discuss universal design and the benefits of captions in post-secondary institutions.

According to ASHA, “Universal Design for Learning (UDL) is a set of principles for curriculum development that gives all students an equal opportunity to learn. It provides a blueprint for creating flexible instruction that can be customized to meet individual needs. The principles of Universal Design propose adapting instruction to individual student needs through multiple means of:

- presentation of information to students (e.g., digital text, audio, video, still photos, images and all in captions as appropriate),

- expression by students (e.g., writing, speaking, drawing, video-recording, assistive technology), and

- engagement for students (e.g., choice of tools, adjustable levels of challenge, cognitive supports, novelty or varied grouping).”1

UDL is geared specifically to students with disabilities; however, all students may benefit from the types of supports UDL provides.1 An example of this is captioning. Captions are “words displayed on a television, computer, mobile device, or movie screen, providing the speech or sound portion of a program or video via text. Captions allow viewers to follow the dialogue and the action of a program simultaneously.”2 Closed captions are hidden from the viewer until the captioning is activated, whereas open captions are permanently encoded into the film and integrated with the image. Subtitles, on the other hand, transcribe the dialogue only. They do not provide a text description of other sounds. Communication Access Realtime Translation (CART) is a word-for-word, near-verbatim, speech-to-text interpreting service used primarily for live events, such as meetings and lectures. Depending on the situation, a CART captioner may be present on site or may be in a different location, using the Internet to deliver the text to the consumer. When the CART captioner is off-site, the service is referred to as remote CART. A CART captioner has met the accuracy guideline if, after review, it is determined that the text meets a minimum accuracy level of 98%.3

Captioning has many advantages for people who are Deaf/deaf or hard of hearing, but it has also been shown to benefit all students. In a study conducted by Kmetz Morris et al., 99% of all students surveyed found closed captions to be helpful when taking online classes, even though only 7% of those students were Deaf/deaf or hard of hearing. Specifically, 5% of students responded that captions were slightly helpful, 10% felt captions were moderately helpful, 35% said captions were very helpful, and 49% found captions to be extremely helpful. Qualitative responses to the student survey pointed to four distinct benefits: clarification, comprehension, spelling of keywords, and note-taking.4 A study by Steinfeld assessed the benefit of real-time captioning in a mainstream classroom as measured by working memory. Results showed that Deaf and hearing subjects had similar abilities to recall written verbal material and that real-time captioning improved performance for both groups.5

A barrier to professors using captions in the classroom may be the common belief that captions are intended for, or beneficial only to, people who are Deaf/deaf or hard of hearing. In a study by Bowe and Kaufman, which surveyed several hundred K-12 educators across 45 U.S. states, almost all reported that they frequently showed videos in their classroom yet never turned on the captions. The minority who had turned them on reported that their students benefited from them.6

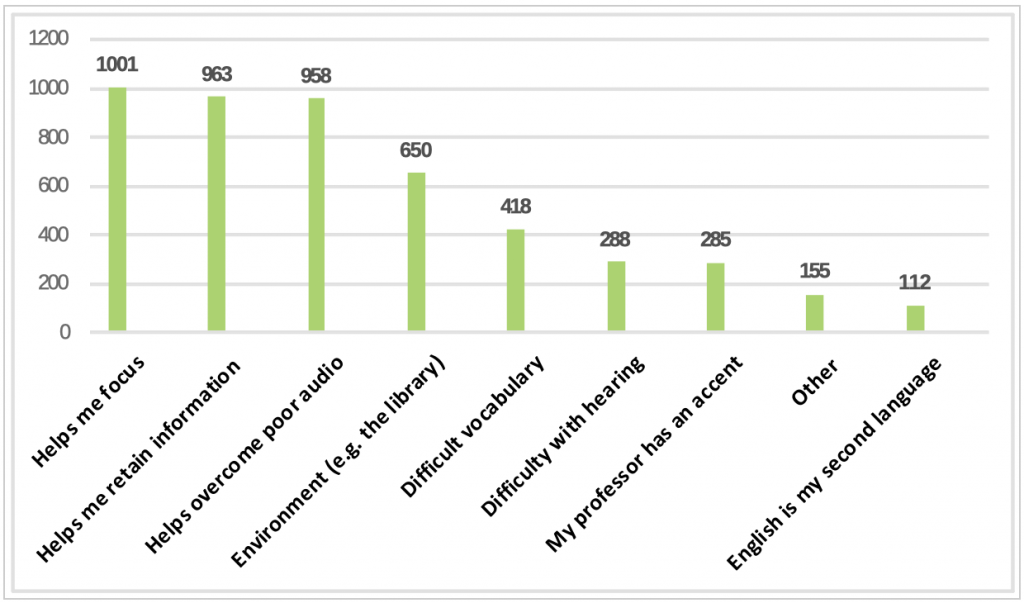

The Oregon State University Ecampus Research Unit conducted a national survey with 3Play Media to learn more about how all students use and perceive closed captions in relation to their learning experiences in the college classroom. The study by Linder had 2124 respondents from 15 different institutions. When closed captions were used, most respondents reported that they were beneficial because they helped them to focus, retain information, and overcome poor audio. For a full list of reported benefits, see Figure 1.

Figure 1. Why respondents used closed captions (n=2124).

Regarding the helpfulness of closed captions, the percentage of respondents who stated that closed captions were either “very” or “extremely” helpful to them was higher for many sub-groups including students with learning disabilities (60.6%), students who have difficulty with vision (64%), students who “always” or “often” have trouble maintaining focus (64.7%), students with other disabilities (65.4%), students registered with an Office of Disability Services (65.8%), ESL students (66%), students receiving academic accommodation (66.3%), and students who have difficulty with hearing (71.4%). It was found that students with disabilities use closed captions more often than students without disabilities, but the size of the difference was small. Overall, 70.8% of survey respondents without hearing difficulties used closed captions at least some of the time. For all respondents, over 65% indicated that closed captions were very or extremely helpful.7

Hearing Loss in Post-Secondary Institutions

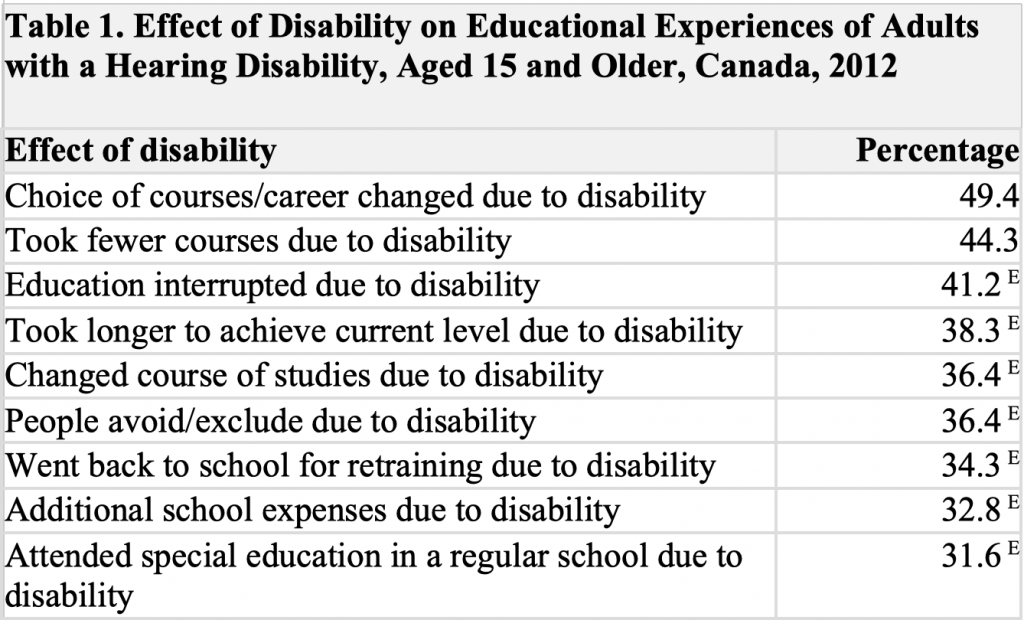

Hearing loss can create serious barriers in post-secondary institutions. The Statistics Canada Participation and Activity Limitation Survey (2006) found that adults aged 15 to 64 with a hearing disability were more likely than those without any type of disability to have not completed high school (23.0% versus 13.1%) and less likely to have a postsecondary qualification (50.3% versus 61.1%). Of the 9.4% of participants who reported that they were currently or had recently been in school, 84.2% stated that their educational experiences were directly impacted by their disability. These impacts included needing to change their choice of courses, taking fewer courses, and feeling excluded due to their disability. See Table 1 for the most common impacts reported. Of the 24.6% of survey participants who required some sort of education aid or service, 58.8% reported that all of their needs had been met, 38.6% reported that at least some of their needs had been met, and 2.6% reported that none of their needs had been met.8 These results indicate that more support is needed for people who are Deaf/deaf and hard of hearing in post-secondary institutions.

E: use with caution

Note: Includes individuals currently in school or in school within the last five years and who had a disability while in school.

Source: Statistics Canada, Canadian Survey on Disability, 2012.

https://www150.statcan.gc.ca/n1/pub/89-654-x/89-654-x2016002-eng.htm

Universities have a duty to accommodate students with a disability and provide them with equal access to all their services. Failure to address the needs related to a disability is a breach of human rights. Having accommodation policies that include captioning (open, closed, CART) is important because hearing loss is common among university students. According to Statistics Canada, the Canadian Health Measures Survey (CHMS) found that 40% of adults aged 20 to 79 and 15% of those aged 20 to 39 had at least slight hearing loss in one or both ears.9 Hearing loss can have a negative impact on interactive learning (e.g., classroom discussions, social interactions) and increases the effort required to communicate. Contributing factors include the need for a greater signal-to-noise ratio compared to those with normal hearing, poor room acoustics, poor lighting, and large classroom sizes that increase the distance to the professor and other classmates. A study by Cheesman, Jennings, and Klinger recommended that, rather than providing special assistance only to students with difficulty hearing who self-disclose for accommodation, a more universal approach that maximizes communication for all students should be considered.10

To get a better understanding, watch the 3½-minute YouTube video “Don’t Leave Me Out! A Film about Captions in Everyday Life,” produced by the Collaborative for Communication Access via Captioning Organization. https://www.youtube.com/watch?time_continue=170&v=w91A_nB4rx0

Harnessing the Power of Captions

It is an exciting time for people who are Deaf/deaf or hard of hearing because of advances in voice recognition technology. These advances allow subtitles to be generated by automated speech or voice recognition, which has facilitated the creation of many new applications on the market. This technology has been long in the making. In 1952, the first voice recognition system (Audrey) could only understand digits spoken by a single voice. In 1962, IBM showcased “Shoebox,” a machine that could understand 16 spoken English words. Further developments improved voice recognition technology to distinguish between 4 vowels and 9 consonants. With this approach of breaking speech down into its component sounds, and a lot of research funding in the 1970s, the “Harpy” speech understanding system and others like it were created. Harpy could understand 1011 words, similar to the vocabulary of a typical 3-year-old.

In the 1980s, the vocabulary of voice recognition systems increased from several hundred to several thousand words. This colossal jump in performance was due to a new statistical method, which predicted unknown sounds (words) through probability instead of matching sounds to templates. At this point the technology was finally effective enough to be commercialized. However, there was still a huge problem: these programs required discrete dictation, meaning you had to pause after each word.

With the arrival of faster processors in the 1990s, continuous speech recognition software became available. At the time, the systems could recognize about 100 words per minute but with only 80% accuracy under the most ideal conditions. Progress then stalled for about a decade before Google solved the processing bottleneck with its cloud data centers in 2008. This allowed for an exponential increase in processing power to analyse even more data in order to better predict words.11 This advancement brings us to the current, ever-improving generation of voice recognition, voice-to-text, and dictation systems such as Dragon by Nuance, Google Docs, Google Translate, Apple Dictation, Interact-AS, Dictation.io, ListNote Speech-to-Text Notes, Speech Recogniser, and Windows 10 Speech Recognition.

There are also a number of editing software tools that professors or students can use themselves to add captions or subtitles, such as Amara.org, Subtitle Workshop, Jubler, Subtitle Creator, or SubMagic. Another advantage of creating captions or subtitles, or of having real-time captioning, is that transcripts can be made and then used as study notes. In a recent article on captioning in Canadian Audiologist, Pam Millett warns, though, that transcripts from real-time captioning do not eliminate the need for notetaking services. Since the transcript for a two-hour lecture may be up to 40 pages long, a student would still need to read through it and make additional notes, which a note taker would help with. She recommends that, for best accessibility, both are usually required.12

Trying New Applications

Current voice-to-text applications are not yet free of errors that interfere with understanding. Although they have come a long way, producing high-quality dictation with a low error rate and high speed is essential to their usability. Challenges still exist with multiple talkers, pre-training, technical terminology, the need for clear enunciation, microphone quality and distance to the talker, lag in transcription, lack of punctuation, and lack of information about sounds other than the dialogue. We are coming close to a time, though, when auto-generated subtitles reach parity with human-transcribed captions.
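To make the idea of an "error rate" concrete, the sketch below scores an automatic transcript against a human-checked one using word error rate (WER), a measure commonly used to evaluate speech recognition. It is an illustration only: the function name and the sample sentences are hypothetical, and this is not necessarily how the CART accuracy guideline or any of the applications discussed here counts errors.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    `reference` is the human-checked transcript and `hypothesis` is the
    automatic one. Both names are assumptions made for this sketch.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] = edits needed to turn the reference words seen so far
    # into the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    checked = "the lecture starts at nine"
    automatic = "the lecture start at nine"
    print(f"WER: {word_error_rate(checked, automatic):.2f}")
```

Under this definition, a transcript with one wrong word in every five has a WER of 0.2, and a lower value means fewer errors. The measure ignores punctuation and non-speech sounds, two of the challenges listed above, so it captures only part of what makes captions usable.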

As a group, we decided to try some of the new voice-to-text applications that could be used in a university setting. Although there are many, we decided on Microsoft Presentation Translator for PowerPoint and Ava, a voice-to-text application used in multiple communication settings. Microsoft Presentation Translator for PowerPoint adds live subtitles directly to the PowerPoint presentation and is available in over 60 languages. The live subtitles can be displayed on the PowerPoint or on a cell phone, and audience members can view the presentation subtitles via the Microsoft Presentation Translator app using a QR code or five-letter conversation code. A transcript can also be saved for study notes. While presenting to peers, we found the overall experience was seamless and straightforward. Once the add-on is installed in PowerPoint, the app takes a few moments to scan the presentation and familiarize itself with its text. The standard laptop microphone or an external microphone may be used during the presentation. The subtitles appear fairly rapidly, and the majority of speech was transcribed accurately. We found the accuracy was markedly improved with clear enunciation during the presentation. Another advantage is that the person lecturing can give microphone control to others in the room if they are linked with their phone, so if other students have questions during the lecture, these will also be transcribed. One drawback, as with all other voice recognition software, is that it is not true captioning in that it does not describe sounds other than the dialogue. Considering that Microsoft Presentation Translator is a free add-on available for download, we felt it holds great promise for future applications.

Informational Links

https://www.youtube.com/watch?v=6Pmtl5j5C3A

https://translator.microsoft.com/help/presentation-translator/

We also tried out an application called “Ava,” which stands for audiovisual accessibility. It is an app for Android and Apple devices that transcribes voice to text. By asking someone to download the app and using a QR code, the user can instantly connect with one or several people at a time, such as a professor delivering a lecture or the members of a study group. Each device has its own microphone, so the app can transcribe several people talking at once. The user can read what each person is saying since each connected phone has its own color code. It can also connect to a remote Bluetooth microphone and provide a student with a transcript of the conversation. After trying it, we found the application to be another exciting tool that students who are Deaf/deaf or hard of hearing may want to consider trying.

https://www.youtube.com/watch?v=BCq9KsnUs7U

https://www.ava.me/

Although the subtitles generated by automatic speech recognition software have not yet been perfected, the technology is improving quickly. There is great potential for the technology to be a valuable resource for people who are Deaf/deaf or hard of hearing in the coming years, so audiologists should stay current on these tools and educate their patients about them. In the meantime, captioning (closed, open, CART) has been shown to facilitate learning and communication for all students. Audiologists and student accessibility offices in universities/colleges should all be aware of and advocate for its use.

Resources

Canadian Hard of Hearing Association - How to Create Accessible Digital Content: A Guide for Service Providers

https://chha.ca/baf/documents/How_to_create_accessible_digital_content.pdf

Canadian Hard of Hearing Association - Full Access: A Guide for Broadcasting Accessibility for Canadians Living with Hearing Loss

https://chha.ca/baf/documents/Guide_for_Broadcasting_Accessibility_for_Canadians_Living_with_Hearing_Loss.pdf

Canadian Association of the Deaf – Captioning and Video Accessibility

http://cad.ca/issues-positions/captioning-and-video-accessibility/

Complying with AODA

https://www.aoda.ca/complying-with-the-integrated-accessibility-standards-ias-captioning-and-describing-web-videos/

Canadian Hearing Society: Classroom Accessibility for Students who are Deaf and Hard of Hearing

https://www.chs.ca/sites/default/files/mhg_images/CHS003_AccessibilityGuide_EN_APPROVED.PDF

Guidelines for CART Captioners

https://www.ncra.org/docs/default-source/uploadedfiles/governmentrelations/guidelines-for-cart-captioners.pdf?sfvrsn=584f6a61_10

The CART Captioner’s Manual

https://www.ncra.org/docs/default-source/uploadedfiles/governmentrelations/cartmanual.pdf

Diagram showing layout of On-site versus Remote Captioning

https://neesonsreporting.com/wp-content/uploads/2016/08/CARTCHART.png

Collaborative for Communication Access via Captioning Organization

http://ccacaptioning.org/

The Seven Benefits of Captioning Videos in Higher Education Infographic

http://go.cielo24.com/hubfs/Infographics/EDU/7%20Caption%20Benefits%20EDU_GOV.png?utm_campaign=Infographics&utm_source=website&utm_medium=resources

References

- American Speech-Language-Hearing Association (ASHA). Universal Design for Learning. Available at: https://www.asha.org/SLP/schools/Universal-Design-for-Learning/

- NIDCD. Captions for Deaf and Hard-of-Hearing Viewers. National Institute on Deafness and Other Communication Disorders, U.S. Department of Health and Human Services. 2017; Available at: www.nidcd.nih.gov/health/captions-deaf-and-hard-hearing-viewers

- NCRA. NCRA Broadcast and CART Captioning Committee Guidelines for CART Captioners (Communication Access Realtime Translation Captioners). 2016; Available at: https://www.ncra.org/docs/default-source/uploadedfiles/governmentrelations/guidelines-for-cart-captioners.pdf?sfvrsn=584f6a61_10

- Kmetz Morris K, Frechette C, Dukes L, et al. Closed captioning matters: examining the value of closed captions for all students. J Postsecond Educat Disabil 2016;29(3):231–38.

- Steinfeld A. The benefit of real-time captioning in a mainstream classroom as measured by working memory. Volta Rev 1998;100(1):29–44.

- Bowe FG and Kaufman A. Captioned media: Teacher perceptions of potential value for students with no hearing impairments: A national survey of special educators. Spartanburg, SC: Described and Captioned Media Program; 2001.

- Linder K. Student uses and perceptions of closed captions and transcripts: Results from a national study. Corvallis, OR: Oregon State University Ecampus Research Unit; 2016.

- Statistics Canada. The 2006 Participation and Activity Limitation Survey: Disability in Canada. Statistics Canada Catalogue no. 12-89-628-X. Ottawa. Version updated Feb 2009. Available at: https://www150.statcan.gc.ca/n1/pub/89-628-x/2009012/fs-fi/fs-fi-eng.htm.

- Statistics Canada. Hearing Loss of Canadians, 2012-2015. Statistics Canada Issue no. 2016001. 2016; Available at: https://www150.statcan.gc.ca/n1/pub/82-625-x/2016001/article/14658-eng.htm.

- Cheesman M, Jennings MB, and Klinger L. Assessing communication accessibility in the university classroom: Towards a goal of universal hearing accessibility. Work 2013;46:139–50.

- Pinola M. Speech Recognition Through the Decades: How We Ended Up with Siri. PC World. 2011; Available at: https://www.pcworld.com/article/243060/speech_recognition_through_the_decades_how_weended_up_with_siri.html?page=2.

- Millett P. Improving Accessibility with Captioning: An Overview of the Current State of Technology. Canadian Audiologist 2019;6(1). Available at: http://www.canadianaudiologist.ca/issue/volume-6-issue-1-2019/column/in-the-classrooms/.